Yarrdachi & Backups: Priorities

Today I need to do some testing and figure out how the WS2812s will sit next to their individual crystals. Then it's on to more of the Yarrdachi front end, but before that I should get some backup systems in place. I really should come up with a proper name for this project. Starting to feel like an asshole.

For the LEDs I think I need to do a little soldering. It occurs to me that I may need to set up a Pi for this project already. I do have one on my desk, mounted to a screen. The main idea is for it to become a dashboard later, so I can keep an eye on servers and projects at arm's length without taxing this laptop... but I can use it for dev as well. I need to find some plugs for it and the screen.

Once I have some power cables, I'll need to test some WS2812s with a few GPIO pins and libraries. Hopefully there's a library with C or some other fast code under the hood that just exposes a Python API, because the WS2812 data line runs at 800 kHz. That's well beyond what I'd expect to toggle a pin at from Python (I think the Pi 1 managed around 73 kHz, the Pi 2 roughly 2.5x that, and this is a Pi 3), so a library that leans on the Pi's hardware is the way to go. Load-wise I'm not worried: I might be if I had hundreds of lights to control from this one node, but in reality it'll be closer to 20.
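For a sense of scale, here's a rough timing budget for one refresh of the strip. It's a back-of-envelope sketch. The 24 bits per LED and the 800 kbit/s data rate come from the WS2812 protocol. The 300 µs latch gap is an assumed conservative value: the commonly quoted minimums are 50 µs for the original WS2812 and 280 µs for newer WS2812Bs.

```python
# Back-of-envelope timing for one frame on a WS2812 chain.
# Assumptions: 24 bits per LED (8 each for G, R, B), an 800 kbit/s data line,
# and a conservative 300 us latch/reset gap (an assumed value, not measured).
BITS_PER_LED = 24
DATA_RATE_BPS = 800_000
RESET_US = 300


def frame_time_us(num_leds: int, reset_us: float = RESET_US) -> float:
    """Microseconds needed to shift one full frame out to num_leds LEDs and latch it."""
    data_us = num_leds * BITS_PER_LED / DATA_RATE_BPS * 1_000_000
    return data_us + reset_us


def max_fps(num_leds: int) -> float:
    """Upper bound on refresh rate, ignoring time spent computing each frame."""
    return 1_000_000 / frame_time_us(num_leds)


if __name__ == "__main__":
    for n in (20, 300):
        print(f"{n:>3} LEDs: {frame_time_us(n):7.1f} us per frame, ~{max_fps(n):.0f} fps max")
```

With around 20 LEDs, a full frame takes under a millisecond, so throughput isn't the issue. The hard part is holding the per-bit timing steady, which is why a hardware-backed library matters more than raw CPU speed.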

As it turns out, I'm short on plugs. In fact, I don't have enough to plug in the Pi and screen here. Huh. Well, I do have an extension cord, but the wiring in this place is dubious, so I don't like using some of the outlets. Let's see...

Yeah, nah. Out of range of anything I trust. Huh. I may have to do this one in another room or work on it after dinner. The office doesn't have enough outlets to safely support this project on top of the servers, the dev hardware and the 3D printers. I also need to solder, which is another plug I don't currently have. I didn't realize how few outlets I have access to: just the three here. Well, whatever. I guess I should be spending my office time either working on the main project or learning something anyway. I'll have to do this one in my spare time in the bedroom, just like the good old days.

In that case I am going to take a shower and figure out my backup procedure going forward.

So I need to back up files (config, images, etc.) and DBs. One option is to have my internal dev server run some kind of cron system and pull the backups to itself on a schedule. Then I could at least make secondary copies of the smaller files and folders by pushing them to some secure cloud storage somewhere.

I could just write a series of shell scripts, then have cron run them on a schedule. This would be the simplest and most automated way.
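Suppose cron ends up calling one entry point instead of each script separately. In that case, a small runner stops a single failing script from hiding the rest. This is a minimal Python sketch, not the scripts from this post. `run_jobs` and any job names passed to it are hypothetical, and it assumes each backup step is an executable command.

```python
import subprocess
import sys
from datetime import datetime


def run_jobs(jobs: dict[str, list[str]], timeout_s: float = 3600) -> dict[str, int]:
    """Run each backup command in order and return its exit code by name.

    A failing job is logged to stderr but does not stop the jobs after it.
    Commands that can't be started at all (missing file, timeout) get -1.
    """
    results: dict[str, int] = {}
    for name, cmd in jobs.items():
        started = datetime.now().isoformat(timespec="seconds")
        try:
            proc = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout_s)
        except (OSError, subprocess.TimeoutExpired) as exc:
            print(f"[{started}] {name}: could not run ({exc})", file=sys.stderr)
            results[name] = -1
            continue
        results[name] = proc.returncode
        if proc.returncode != 0:
            print(f"[{started}] {name}: exit {proc.returncode}\n{proc.stderr}", file=sys.stderr)
    return results
```

When called from a cron-driven wrapper, it returns one exit code per job. That makes it easy to alert on anything non-zero instead of discovering a dead backup weeks later.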

Alright, so there's Docker Hub, which I can use to back up most things. It's gonna cost $7 a month, which is outside the budget this month since we're still transitioning to the main server and shutting down extra crap we no longer need. I'm also not sure I want to spend $7 a month just for that.

So for now and possibly for the future I will have to spin up my own registry? Can I use a registry for backups? Let's find out. Hmm, that's odd. Alright, deeper dive time.

I did see one particularly useful piece of advice.

> In order to prevent those dangling volumes, the trick is to create an additional docker container using the data volume you want to persist so that there will always be at least that docker container referencing the volume.

Thanks, Thomasleveil on Stack Overflow. I'll probably do that in the future, considering what happened to most of this blog's posts.

However, that isn't my problem right now, though it is a problem and I now have a solution for it. What I might do is have these secondary projects BE the backup systems. They wouldn't face the outside network, only the internal one. Each would write its backups into its own folder on a secondary volume that they all share. Then I just zip it up and upload it somewhere. That can be my big once-a-month backup. The other backups can run weekly or more often. I should mirror my main DBs as well, then take backups from both for redundancy. Data is very important in my projects; I need to protect it to the best of my ability.

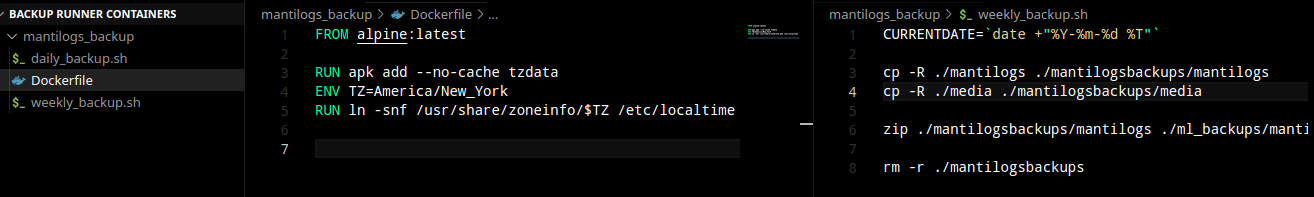

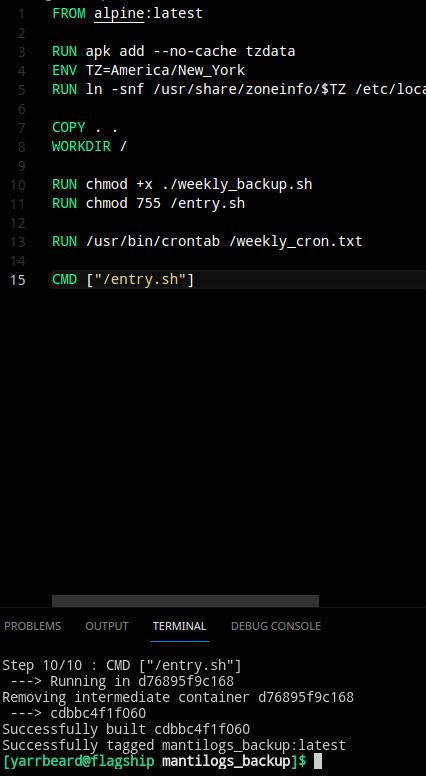

So the plan as of now is to create a bunch of containers that share the volumes they aim to preserve. Each project then gets a shell script (or several) that copies the folder, zips it and deletes the copy. In the case of Postgres, the script does a dump named after the date and type of dump instead. The usual stuff you set up in cron for backups. I'd also like to do some mirroring, but we'll see if there's time for that.
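Here's the copy, zip, delete-the-copy flow as a minimal Python sketch. It assumes the live folder is mounted in the backup container. `snapshot_and_zip` is a hypothetical name, and the `<label>_<date>.zip` naming is an assumption modeled on the date-stamped dumps described above.

```python
import shutil
import tempfile
from datetime import date
from pathlib import Path
from typing import Optional


def snapshot_and_zip(src: Path, dest_dir: Path, label: str,
                     today: Optional[date] = None) -> Path:
    """Copy src to scratch space, zip the copy into dest_dir, then delete the copy.

    Zipping a copy means the archive is built from a snapshot rather than from a
    folder that might change while it is being read. Returns the archive path,
    e.g. dest_dir/<label>_<YYYY-MM-DD>.zip (naming scheme is hypothetical).
    """
    today = today or date.today()
    dest_dir.mkdir(parents=True, exist_ok=True)
    base = dest_dir / f"{label}_{today.isoformat()}"
    with tempfile.TemporaryDirectory() as scratch:
        staged = Path(scratch) / src.name
        shutil.copytree(src, staged)  # 1. copy the live folder to scratch space
        archive = shutil.make_archive(str(base), "zip", root_dir=staged)  # 2. zip the copy
    # 3. leaving the with-block deletes the scratch copy
    return Path(archive)
```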

Step one: figure out the volumes I need to preserve, as well as the DBs.

Step two: figure out which parts of them I need to focus on and how often.

Step three:

Step four: profit?

TO THE LAB BOOK!

There's the list of things to back up. Now to map those to volumes and figure out what in each will need backing up.

Alright, now I know which folders to include in my backups. Next is to create the volume that will hold them. Many containers will use it, and each one will need its own folder inside for the backups of the container it is responsible for. I'm sure something out there already does this and ships as a ready-to-use container. I could look into those, but I think there's more to learn by doing it this way, and I ain't gonna walk away from a learning experience like this.
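The shared-volume layout could be as simple as one folder per project under a common mount. This is a sketch under assumptions: the `/backups` mount point, the `project_backup_dir` helper and the project names are all hypothetical.

```python
from pathlib import Path

# Hypothetical mount point for the shared backup volume inside each backup container.
BACKUP_ROOT = Path("/backups")


def project_backup_dir(project: str, root: Path = BACKUP_ROOT) -> Path:
    """Return (and create) the folder a single backup container owns on the shared volume.

    Rejects names that could escape the root, so one misconfigured container
    can't write into (or over) another project's backups.
    """
    if not project or project in {".", ".."} or "/" in project or "\\" in project:
        raise ValueError(f"invalid project name: {project!r}")
    folder = root / project
    folder.mkdir(parents=True, exist_ok=True)
    return folder
```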

I've discovered I already did this for my Valheim server, luckily: I bound its volumes to a place on disk where I can download the backups and saves from. I have similar solutions for my Minecraft server, so I can keep those backed up fairly easily. Next step is preserving the mantilogs files. Once a month I want to back up the images, and once a week the conf. Once a day I'd like to mirror the DB somewhere else, and once a week I'd like to do a pg_dumpall and compare the dumps to find any drift.
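For the drift check, a plain text diff of two dumps is one simple approach. This sketch assumes plain-SQL dumps, which is what pg_dumpall produces. It skips `--` comment lines, since header comments such as version strings can differ between dumps that are otherwise identical. `dump_drift` is a hypothetical helper.

```python
import difflib
from pathlib import Path


def dump_drift(dump_a: Path, dump_b: Path, max_lines: int = 50) -> list[str]:
    """Return a unified diff between two SQL dumps (an empty list means no drift found).

    Lines starting with "--" (SQL comments) are ignored before comparing.
    Output is truncated to max_lines so a big drift doesn't flood a cron log.
    """
    def meaningful(path: Path) -> list[str]:
        lines = path.read_text(encoding="utf-8").splitlines()
        return [line for line in lines if not line.startswith("--")]

    diff = difflib.unified_diff(
        meaningful(dump_a), meaningful(dump_b),
        fromfile=str(dump_a), tofile=str(dump_b), lineterm="",
    )
    return list(diff)[:max_lines]
```

Treat a non-empty diff as a prompt to go look, not as proof of drift. As far as I know, rows in the COPY blocks come out in storage order, not sorted order. So a mirror holding the same data could still produce a noisy diff.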

Alright, time to take the dog out, then start on the mantilogs backup container.

Now will it do what I want it to? That's the real question. It seems to have no problems on the docker side of things, but the shell side is what I am worried about. I guess I will have to run a test.

I need to upload this and test it. I suppose the smart move might be to set up a registry on the server, then build the image and deploy it to and from there.

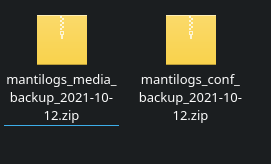

There, the first step is done: weekly backups of pictures and confs. I'll have to make sure it fires on Sunday, though. Must make a note.
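One thing worth checking when confirming the Sunday firing: cron and Python number the days of the week differently. Cron counts Sunday as 0, while Python's `date.weekday()` counts Monday as 0 and Sunday as 6. Mixing them up shifts a job by a day. The helpers below are hypothetical, shown only to make the conversion explicit.

```python
from datetime import date

# Python's date.weekday(): Monday=0 ... Sunday=6.
# cron's day-of-week field: Sunday=0 (7 also means Sunday in common crons), Monday=1 ... Saturday=6.
# e.g. a crontab entry like `0 3 * * 0 /path/to/backup.sh` (hypothetical path) runs at 03:00 every Sunday.


def cron_dow(d: date) -> int:
    """Convert a date to cron's day-of-week number (Sunday=0)."""
    return (d.weekday() + 1) % 7


def weekly_job_due(d: date, cron_day: int = 0) -> bool:
    """True if a weekly job scheduled for cron day-of-week cron_day should fire on d."""
    return cron_dow(d) == cron_day % 7  # % 7 folds cron's alternate Sunday (7) into 0
```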

Next is a backup system for the DB; just gotta do a pg_dumpall for now.
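Here's a sketch of building a date-stamped pg_dumpall command, in line with naming dumps after the date and type. The flags `-h`, `-U`, `-f`, `--globals-only` and `--schema-only` are real pg_dumpall options. The function name, the dump "kinds" and the file naming are hypothetical. It also assumes the password comes from `~/.pgpass` or `PGPASSWORD`, so none is passed on the command line.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional

# Extra pg_dumpall flags per dump type (the kind names themselves are hypothetical).
KIND_FLAGS = {
    "full": [],                     # roles, tablespaces and every database
    "globals": ["--globals-only"],  # roles and tablespaces only
    "schema": ["--schema-only"],    # definitions without data
}


def pg_dumpall_command(out_dir: Path, host: str, user: str, kind: str = "full",
                       now: Optional[datetime] = None) -> list[str]:
    """Build a pg_dumpall command whose output file is named after the dump type and time.

    Hypothetical naming: <out_dir>/pg_dumpall_<kind>_<YYYY-MM-DD_HHMM>.sql
    """
    if kind not in KIND_FLAGS:
        raise ValueError(f"unknown dump kind: {kind!r}")
    now = now or datetime.now()
    out_file = out_dir / f"pg_dumpall_{kind}_{now:%Y-%m-%d_%H%M}.sql"
    return ["pg_dumpall", "-h", host, "-U", user, *KIND_FLAGS[kind], "-f", str(out_file)]
```

Running it is then `subprocess.run(cmd, check=True)`, which raises if pg_dumpall exits non-zero. The failure then shows up in whatever cron logging is in place.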

There's that done now.

Now I just wait until Sunday to be sure it's working as intended and firing at the right time. And that's the mantilogs autobackup working.
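Instead of eyeballing the folder on Monday, a freshness check can confirm that a weekly archive actually landed. This is a minimal sketch. The helper names are hypothetical, and the 7.5-day threshold (a week plus some slack) and the `*.zip` pattern are assumptions.

```python
import time
from pathlib import Path
from typing import Optional


def newest_backup_age_days(folder: Path, pattern: str = "*.zip",
                           now: Optional[float] = None) -> Optional[float]:
    """Age in days of the most recently modified file matching pattern, or None if none exist."""
    files = [p for p in folder.glob(pattern) if p.is_file()]
    if not files:
        return None
    now = time.time() if now is None else now
    newest = max(p.stat().st_mtime for p in files)
    return (now - newest) / 86400


def backup_is_fresh(folder: Path, max_age_days: float = 7.5, pattern: str = "*.zip") -> bool:
    """True if a backup landed within max_age_days (a week plus slack for weekly jobs)."""
    age = newest_backup_age_days(folder, pattern)
    return age is not None and age <= max_age_days
```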

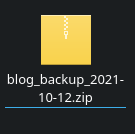

And there's the blog.

Now I have backups in place for the main parts of the site that I tend to break the most. That took a little longer than expected; it's 4:30 pm. There was some troubleshooting around how the zip command worked: I forgot the -r flag for recursive. Originally I was copying the folder and then zipping the copy. But I figure nobody should be uploading images when this fires, so I dropped the copy step.
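For comparison, here's what zipping in place looks like in Python, where walking the tree plays the role of zip's `-r`. As far as I know, without `-r` the zip CLI stores only the directory entry itself, not its contents. `zip_dir_recursive` is a hypothetical helper, and it refuses to write the archive inside the folder being zipped so the archive can't end up zipping itself.

```python
import os
import zipfile
from pathlib import Path


def zip_dir_recursive(src: Path, archive: Path) -> int:
    """Zip every file under src (like `zip -r`), storing paths relative to src.

    Returns the number of files written. Zips the live folder directly, with no
    copy step, so it assumes nothing is writing to src while it runs.
    """
    if archive.resolve().is_relative_to(src.resolve()):
        raise ValueError("archive must live outside the folder being zipped")
    count = 0
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for dirpath, _dirnames, filenames in os.walk(src):  # descends into every subfolder
            for name in filenames:
                full = Path(dirpath) / name
                zf.write(full, arcname=full.relative_to(src).as_posix())
                count += 1
    return count
```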

If it does come back to bite me in the ass, well, that's on me. I can point back to this moment and know exactly where I made a bad choice.

Anyway, I'll clean up a bit, back these up to a git repo and clock out. Almost time to cook.

Next step in the backups is automatically detecting changes on the volume and uploading some things to a cloud storage system. Maybe tomorrow?
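One simple way to detect changes on the volume is to poll it. The idea is to take a snapshot of file hashes on each run (from cron, say) and compare it with the previous one. This is a sketch under assumptions. The helper names are hypothetical. It reads whole files into memory, which suits confs but could get slow on a big image folder. There, comparing size and modification time first would be cheaper. An event-based watcher would be the other route.

```python
import hashlib
from pathlib import Path


def hash_snapshot(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to a SHA-256 of its contents."""
    state: dict[str, str] = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            state[path.relative_to(root).as_posix()] = digest
    return state


def diff_snapshots(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """List files added, removed, or changed between two snapshots."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }
```

Only the files under "added" and "changed" would then need uploading to cloud storage, and the new snapshot gets saved for the next run.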

Cheers.